This post was inspired by an answer Vitalik gave in a recent reddit AMA. I wrote a similar article in 2021, but I have updated it for the new circumstances.

Gas Limit

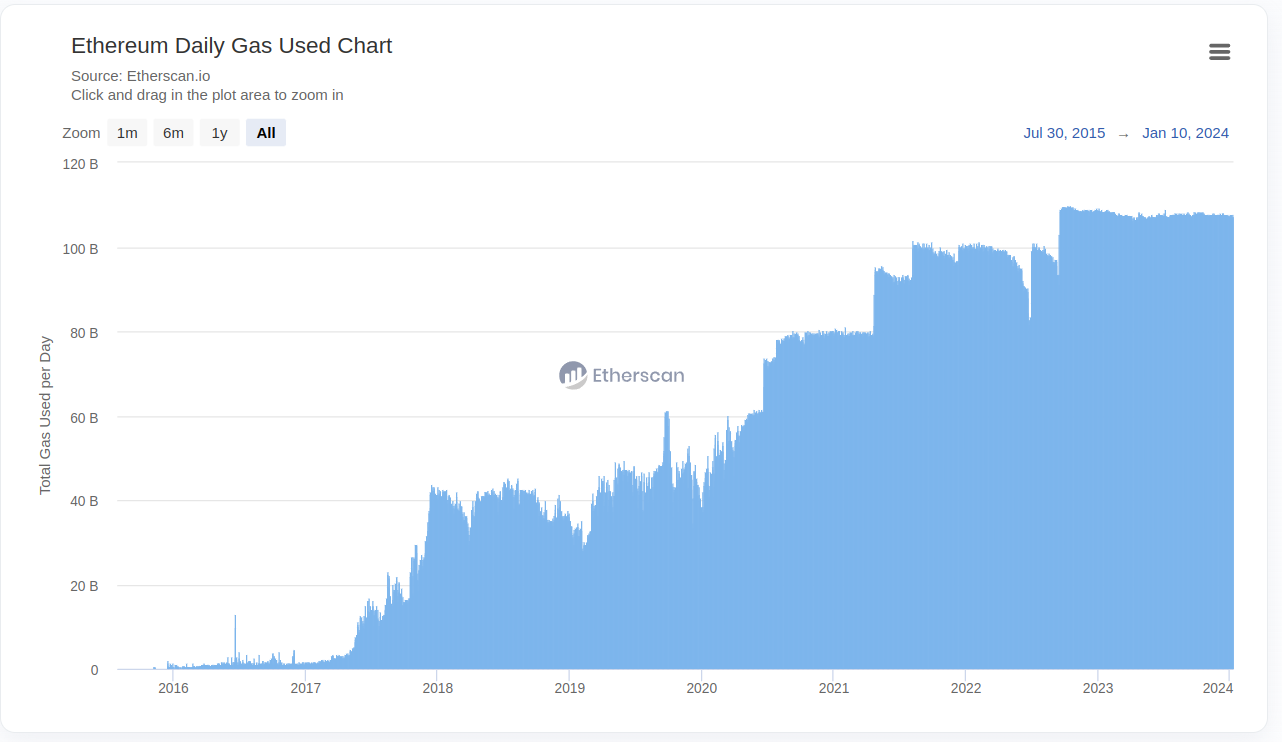

The gas limit determines how much work can be done in a block, and thus how many transactions can be executed per block. Raising the gas limit would allow Ethereum to handle a higher transaction throughput or more complex transactions. Historically, the gas limit is a number that the miners/stakers can influence, and it has been increased over the years. The chart above by etherscan.io shows the historical gas usage (which is very close to the gas limit, since all gas limit increases have been eaten up by the market).
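To make the link between gas limit and throughput concrete, here is a minimal sketch that computes an upper bound on plain ETH transfers per block and per second. It assumes the 30MGas limit discussed in this post, the 21,000 gas cost of a simple transfer, and 12 second slots. The 40MGas value is purely hypothetical, and real blocks contain far more complex transactions, so treat the numbers as a ceiling rather than a forecast.

```python
# Back-of-the-envelope throughput bound for a given block gas limit.
TRANSFER_GAS = 21_000   # gas used by a plain ETH transfer
SLOT_SECONDS = 12       # post-merge slot time


def max_transfers_per_block(gas_limit: int, gas_per_tx: int = TRANSFER_GAS) -> int:
    """Largest number of simple transfers that fit into one block."""
    return gas_limit // gas_per_tx


def max_transfers_per_second(gas_limit: int) -> float:
    """Upper bound on simple transfers per second if every block is full."""
    return max_transfers_per_block(gas_limit) / SLOT_SECONDS


if __name__ == "__main__":
    # 30 MGas is the current limit; 40 MGas is a hypothetical increase.
    for limit in (30_000_000, 40_000_000):
        per_block = max_transfers_per_block(limit)
        per_second = max_transfers_per_second(limit)
        print(f"{limit:>11,} gas: {per_block} transfers/block, {per_second:.1f} transfers/s")
```

The bound scales linearly with the gas limit, which is why every increase translates directly into more state and history written per block.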

Risks

There are several risks associated with raising the gas limit right now.

Missed block rate

My previous article mentioned the uncle rate as one of the most discussed metrics when assessing a gas limit increase. Now, after the merge, we don’t have uncles anymore. The only real way to see whether nodes are handling the current gas limit well is to look at the rate of missed blocks. This is a flawed metric though, since it only shows us the nodes that are currently under-provisioned. It fails to give us a good indicator for a gas limit increase. It also only really shows the average case, not the worst-case blocks that could happen during an attack.

State size

At block 18418786 (on 24.10.2023), the account snapshot was 10.33GB and the storage snapshot was 76.59GB, so the overall state was roughly 87GB. At block 17419840 (06.06.2023) the state was slightly less than 80GB. This would imply a state growth of roughly 7GB in 4 months or almost 2GB per month.

If we were to extrapolate that with 87+(2*12*#years), the state will be 111GB in a year and 207GB in five years. The problem here is not the size itself. Everyone will be able to store that amount of data; however, accessing and modifying it will become slower and slower.
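The extrapolation above can be written as a small function. This is only a sketch of the linear model used in this post (87GB today, roughly 2GB of growth per month); the function and parameter names are made up for illustration, and a gas limit increase would steepen the slope.

```python
def projected_state_gb(years: float,
                       current_gb: float = 87.0,
                       growth_gb_per_month: float = 2.0) -> float:
    """Linear projection of the snapshot state size, in GB."""
    return current_gb + growth_gb_per_month * 12 * years


if __name__ == "__main__":
    for years in (1, 5):
        print(f"{years} year(s): {projected_state_gb(years):.0f}GB")
```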

And this is only the snapshot, which is the plain state. Geth needs to store this state in a different form as well in order to verify the state root. This other format (the trie nodes) needs roughly 180GB at block 18418786.

Thus the total space needed right now is roughly 267GB for the state alone. If we increase the gas limit, this size will grow even quicker.

The problem with state growth is that, unlike history, we have no clear path to removing state. There are no concrete state-expiry proposals that we can quickly implement to get rid of the ever-growing state.

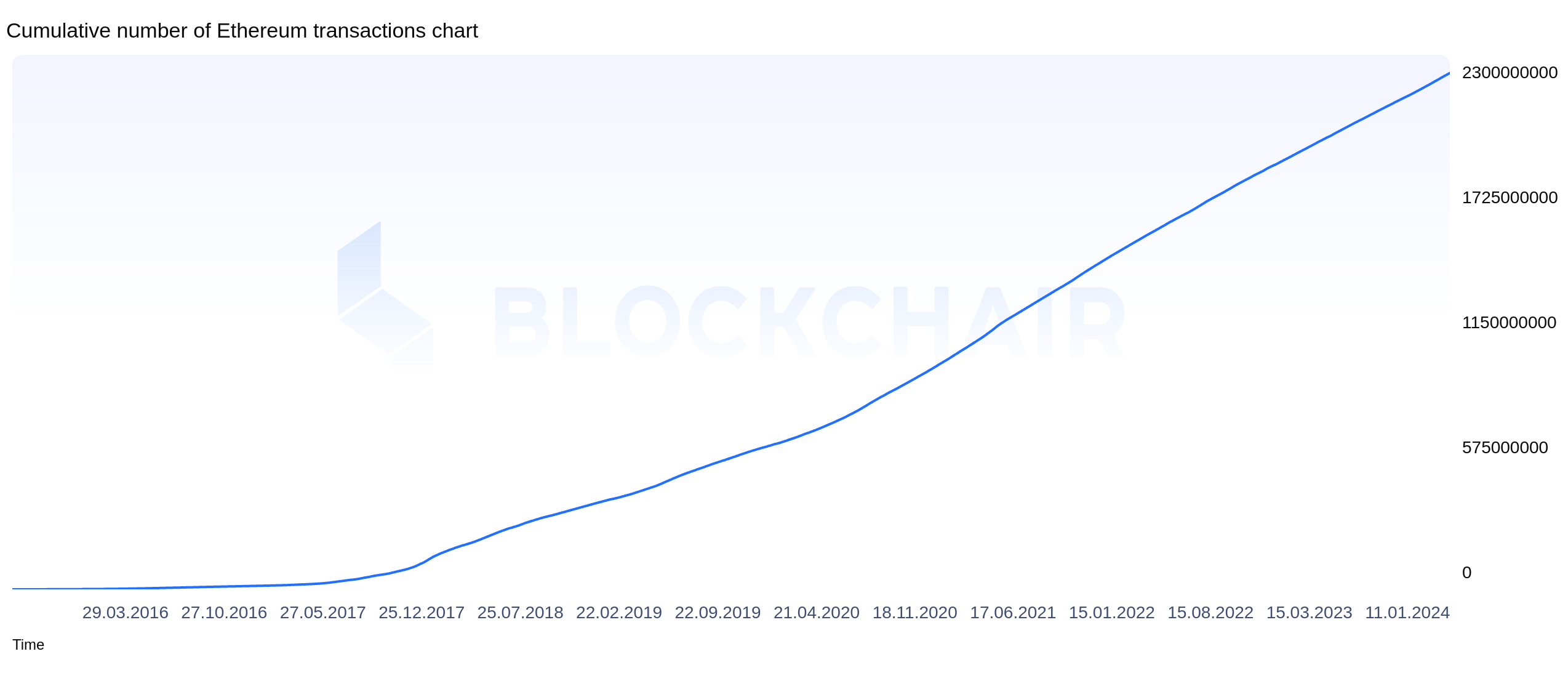

History size

In my previous post in February 2021, a full geth node was ~350GB (freshly pruned). Almost three years later a full geth node (on PBSS) is over 900GB. The following chart shows the cumulative number of transactions. It’s easy to see that the transaction count more than doubled in three years, from roughly 980 million to over 2.2 billion transactions.

With the rise of L2s, the history size has become more of a problem, since their way to store data right now (before 4844 goes live) is calldata. The bodies at block 18418786 were over 427GB; at block 17419840 (again 4 months earlier) they were 339GB. This implies a growth of 88GB in 4 months or roughly 22GB per month.

We can extrapolate this growth again with 427+(22*12*#years), which is 691GB in a year and 1747GB in five years (again assuming linear growth).
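As a cross-check, the monthly growth rate can be derived directly from the two body sizes quoted above instead of being typed in by hand. The sketch below does that and then applies the same linear projection. The helper names are hypothetical, and the 4 month interval is the rounded figure used in this post.

```python
def monthly_growth_gb(earlier_gb: float, later_gb: float, months: float) -> float:
    """Average growth per month between two measurements, in GB."""
    return (later_gb - earlier_gb) / months


def project_gb(current_gb: float, growth_gb_per_month: float, years: float) -> float:
    """Linear projection of a size that grows at a constant monthly rate."""
    return current_gb + growth_gb_per_month * 12 * years


if __name__ == "__main__":
    # Block bodies: 339GB at block 17419840, 427GB at block 18418786.
    rate = monthly_growth_gb(earlier_gb=339, later_gb=427, months=4)
    print(f"growth: {rate:.0f}GB per month")
    for years in (1, 5):
        print(f"{years} year(s): {project_gb(427, rate, years):.0f}GB")
```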

Hopefully EIP-4844 will slow this growth down, since L2s will stop using CALLDATA (and thus transaction size) for data availability and move to blobs, which expire after a few weeks.

EIP-4444 will solve the history growth problem since full nodes don’t need to store all of the history anymore. Implementing EIP-4444 requires a robust network for retrieving the history before we can allow full nodes to stop serving the history though.

Sync times

The gas limit impacts sync times in multiple ways:

- Full sync becomes way slower. It takes a geth node more than a week to fully sync the chain; other clients have optimized full sync better

- Syncing the history is slower because we need to download more data

- Snap syncing the state is slower since we need to download more state

- Snap healing is slower. Since the pivot point moves during snap sync, we have a lot of incomplete states on disk that need to be healed. This healing phase is slower if the pivot moves more often and if more changes are applied each block

- Catching up with the chain is slower since a node needs to crank through more changes to get to the head

Client diversity

Building a new EL client is already a monumental task. Increasing the gas limit has the additional drawback that it makes building the client and optimizing it for mainnet use harder. Geth has had 10+ years of development which included a lot of optimizations. The counterargument here would be that new clients can learn from existing clients and not make the same mistakes.

However, we’re already seeing two clients (Execution Specs, a client written in Python, and EthereumJS, a JavaScript client) struggle with mainnet. This also means that right now clients in certain languages are no longer possible. Increasing the gas limit will make it harder for some clients to keep up simply because of language overhead and library maturity.

We’ve also seen this with KZG, where in order to get the needed performance most clients rely on calls to C-KZG, a library written in C, instead of using a library written in their language of choice.

Worst case

When thinking about the gas limit, we cannot look at the average case. We always have to look at the worst case. Sure, a node might chug along nicely when the chain has an average load, but what happens if all of a sudden the disk I/O is doubled for 5 blocks in a row? How will the network fare with 5 second blocks?
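A toy example shows why the average is misleading. The block processing times below are invented for illustration, and the 4 second budget is an assumption for this sketch (attestations are due a third of the way into a 12 second slot). The mean looks perfectly healthy even though five consecutive blocks blow through the budget.

```python
from statistics import mean

DEADLINE_SECONDS = 4.0  # assumed processing budget per block


def summarize(block_times: list[float], deadline: float = DEADLINE_SECONDS) -> dict:
    """Compare the average case with the worst case for a run of blocks."""
    late = [t for t in block_times if t > deadline]
    return {
        "mean": mean(block_times),
        "max": max(block_times),
        "late_blocks": len(late),
    }


# Hypothetical data: 95 ordinary blocks followed by 5 attack blocks.
normal_blocks = [0.3] * 95
attack_blocks = [5.0] * 5
stats = summarize(normal_blocks + attack_blocks)

if __name__ == "__main__":
    print(f"mean {stats['mean']:.2f}s, max {stats['max']:.1f}s, "
          f"{stats['late_blocks']} blocks over budget")
```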

edit: I removed a table at this point which showed runtimes of >4 seconds for a full 30MGas block in older versions of clients under old chain rules

The runtime is not the only metric that we need to look at. If an attacker could hog other resources, like disk I/O, CPU time or memory, they could force lower-provisioned machines offline. Especially post-merge, with two clients running on the same machine, attacking one could cause instability in the other client. In the early days of merge testing we saw this a few times, where a memory leak in one client brought down the whole system.

Another worst case to consider is the proof size. With an increased gas limit, the number of potential state changes that can occur between two blocks increases. This affects snap sync, as discussed earlier, but it also impacts the size of the proof for execution layer light clients. This is not a big deal right now, since proofs for the Merkle-Patricia trie are already too big to send over the network. However, if we want to implement the cross-validation idea of running multiple light clients on the same machine, the proof size starts to matter.

Solutions

Are we doomed? Will we always stay at a 30MGas limit? Not really!

In my previous post from 2021 I proposed solutions for the problems we faced at that time. For the full sync issues we faced in 2021 geth implemented snap sync and snapshots. For the issues with pruning and the database layout, geth implemented PBSS. The Txpool got more robust at handling high transaction loads and the bulk of MEV frontrunning transactions moved to the builders. A lot of transactions also moved to L2s, which in turn increased the average size of transactions on mainnet.

The only solution that has not been implemented yet is regenesis. Over the years the viewpoints changed a bit, and it looks like most people favor EIP-4444 history expiry as a short-term solution for history growth. For EIP-4444 to ship, we need a robust network of history-serving nodes, so that the history does not get lost even if it is not stored by every full node anymore (btw. most bitcoin nodes do not store the history at all).

We still have not found a decent and realistic approach to state expiry. There are a few interesting approaches, so I would encourage everyone who’s interested to join the #state-expiry channel on the Eth R&D discord.

As you’ve seen with the attack pre-Shanghai, there were a few known attacks that prevented us from raising the gas limit. All of them (to the best of my knowledge) have been fixed.

At the time of this writing, EIP-4844 is being launched on testnets. This EIP will increase the storage and I/O requirements of a node. In my opinion it would be safest to wait for the fallout of this change on mainnet before attempting any type of gas limit increase. Once L2s have moved to blob transactions, we should increase the cost of calldata (in my opinion, calldata is underpriced compared to the other data that nodes need to store). This would also serve as a forcing function for L2s to use blobspace.

All in all, I would like to caution everyone to tread carefully when considering gas limit increases, as they affect many different areas of the node, some more obvious than others. It is very important in this debate to consider both the long-term and short-term effects of gas limit changes.